1. Reinforcement Learning Introduction

18 Sep 2019 | Reinforcement Learning

What is Reinforcement Learning?

There are three big areas in AI:

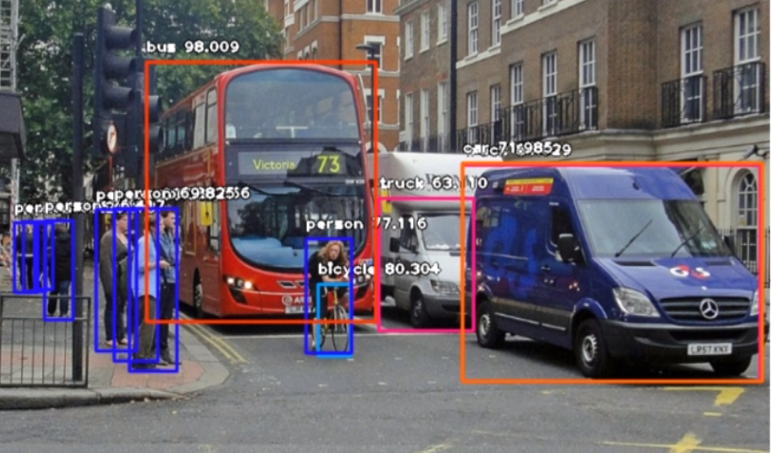

- Supervised Learning (e.g. spam detection, image classification)

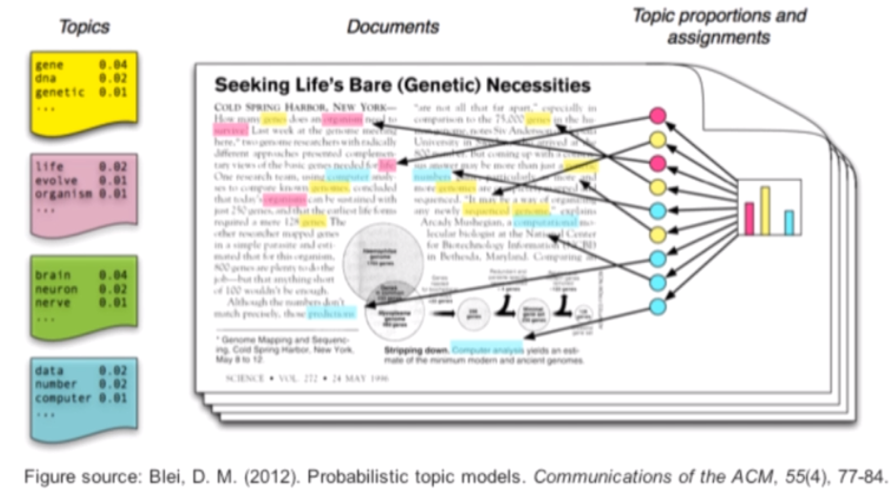

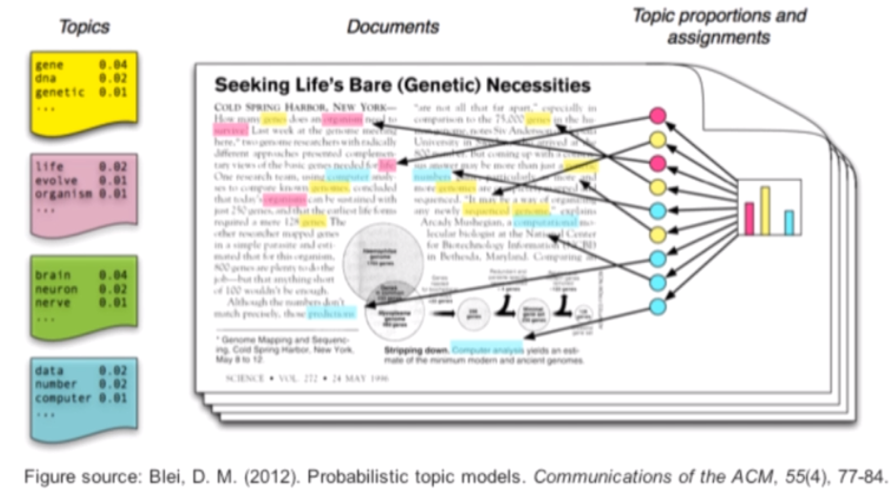

- Unsupervised Learning (e.g. topic modeling of web pages, clustering genetic sequences)

- Reinforcement Learning (e.g. Tic-Tac-Toe, Go, Chess, walking, Super Mario, Doom, StarCraft)

1. Supervised/Unsupervised Interfaces

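- As we know from ML theory, both kinds of model expose a simple interface:

```python
class SupervisedModel:
    def fit(self, X, y):
        ...  # learn from inputs X and targets y

    def predict(self, X):
        ...  # return predicted targets for new inputs


class UnsupervisedModel:
    def fit(self, X):
        ...  # learn structure from the inputs alone

    def transform(self, X):
        ...  # e.g. return cluster assignments
```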

- The common theme is “training data”

  - Input data: X (an N x D matrix)

  - Targets: Y (an N x 1 vector)

- “All data is the same”

  - The format doesn’t change whether you’re in biology, finance, economics, etc.

  - It fits neatly into one library: scikit-learn

- “Simplistic”, but still useful:

  - Face detection

  - Speech recognition
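To make the interface concrete, here is a minimal sketch of a model that follows the same `fit`/`predict` pattern. The class `NearestNeighborModel` and the toy data are hypothetical and written in plain Python for illustration; scikit-learn's estimators follow the same pattern but are not used here.

```python
class NearestNeighborModel:
    """Hypothetical 1-nearest-neighbour classifier with a fit/predict interface."""

    def fit(self, X, y):
        # X: N rows of D features, y: N targets. "Training" just stores the table.
        self.X, self.y = list(X), list(y)
        return self

    def predict(self, X):
        # One predicted target per input row.
        return [self._nearest_target(row) for row in X]

    def _nearest_target(self, row):
        def sq_dist(other):
            return sum((a - b) ** 2 for a, b in zip(row, other))

        # Index of the stored row closest to the query row.
        best = min(range(len(self.X)), key=lambda i: sq_dist(self.X[i]))
        return self.y[best]


# Toy training data: N = 4 samples, D = 2 features (made-up values).
X_train = [[0.0, 0.0], [0.1, 0.2], [5.0, 5.0], [5.2, 4.9]]
y_train = ["ham", "ham", "spam", "spam"]

model = NearestNeighborModel().fit(X_train, y_train)
print(model.predict([[0.2, 0.1], [4.8, 5.1]]))  # prints ['ham', 'spam']
```

Nothing in the model depends on what the columns mean, which is the sense in which “all data is the same”.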

2. Reinforcement Learning

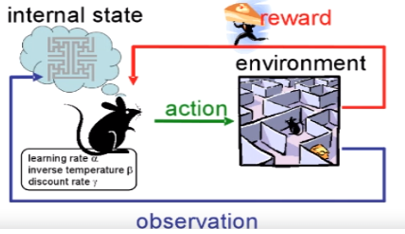

- Not just a static table of data

- An agent interacts with the world (the environment)

  - The environment can be simulated or real (e.g. a vacuum robot)

- Data comes from sensors

  - Cameras, microphones, GPS, accelerometers

  - A continuous stream of data

- The agent must consider both past and future

- An RL agent is a “thing” with a lifetime

  - At each step, it decides what to do

- An (un)supervised model is just a static object: input -> output
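The interaction described above can be sketched as a loop. This is only an illustration: `CorridorEnv`, `RandomAgent`, and `run_episode` are hypothetical names invented here, and the environment is a toy corridor rather than anything from a real library.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor with cells 0..length-1.

    The agent starts in cell 0 and the episode ends at the last cell.
    """

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position  # the initial state

    def step(self, action):
        # action is -1 (move left) or +1 (move right); walls clamp the position.
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else 0.0
        return self.position, reward, done


class RandomAgent:
    """Picks a random action at every step (no learning yet)."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def act(self, state):
        return self.rng.choice([-1, 1])


def run_episode(env, agent, max_steps=1000):
    """Run one episode and return (total reward, number of steps taken)."""
    state = env.reset()
    total_reward, steps = 0.0, 0
    for _ in range(max_steps):
        action = agent.act(state)               # the agent decides what to do
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        steps += 1
        if done:
            break
    return total_reward, steps


reward, steps = run_episode(CorridorEnv(), RandomAgent())
print(f"episode ended after {steps} steps with total reward {reward}")
```

Unlike `fit(X, y)`, there is no table here: the data the agent sees depends on the actions it took earlier.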

3. Isn’t it still supervised learning?

- X can represent the state I’m in, and Y the target (the ideal action to perform in that state)

  - state = sensor recordings from a self-driving car

  - state = video game screenshot

  - state = chess board position

- Yes, in principle, but consider Go: $ N = 8 \times 10^{100} $

- ImageNet, the image classification benchmark, has $ N = 10^6 $ images

  - Go is 94 orders of magnitude larger

  - Training on ImageNet takes ~1 day with good hardware

  - 1 order of magnitude larger -> 10 days (assuming training time scales linearly with N)

  - 2 orders of magnitude larger -> 100 days
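The scaling argument can be checked with a few lines of arithmetic. This sketch assumes, as the bullets above do, that training time grows linearly with the dataset size; `training_days` is a hypothetical helper written only for this illustration.

```python
def training_days(orders_of_magnitude, base_days=1):
    """Days of training, assuming time grows linearly with dataset size."""
    return base_days * 10 ** orders_of_magnitude


for k in (1, 2, 94):
    print(f"{k} orders of magnitude larger -> {training_days(k):.0e} days")
```

For comparison, the age of the universe is roughly 5 x 10^12 days, so a dataset 94 orders of magnitude larger than ImageNet is far out of reach for this approach.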

4. Rewards

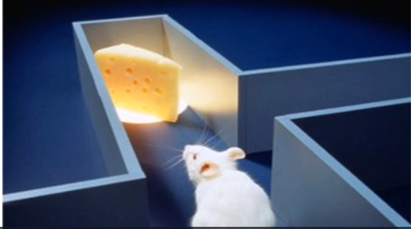

- Sometimes you’ll see references to psychology; RL has been used to model animal behavior

- An RL agent’s goal is in the future

  - In contrast, a supervised model simply tries to get good accuracy / minimize cost on the current input

- Feedback signals (rewards) come from the environment (i.e. the agent experiences them)

5. Rewards vs Targets

- You might think of supervised targets/labels as something like rewards, but these handmade labels are coded by humans; they do not come from the environment

- Supervised inputs/targets are just database tables

- A supervised model instantly knows whether it is right or wrong, because inputs and targets are provided simultaneously

- RL is dynamic: if an agent is solving a maze, it only knows its decisions were correct if it eventually solves the maze
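The contrast between targets and rewards can be sketched in a few lines. All three functions below are hypothetical helpers written for this illustration, and the maze is reduced to a list of actions plus a flag saying whether it was eventually solved.

```python
def supervised_feedback(predictions, targets):
    """Each prediction is checked against its label immediately."""
    return [p == t for p, t in zip(predictions, targets)]


def maze_feedback(actions, solved):
    """Hypothetical maze episode: one reward, delivered only at the very end."""
    rewards = [0.0] * len(actions)
    if solved and rewards:
        rewards[-1] = 1.0
    return rewards


def future_reward(rewards):
    """Sum of rewards from each step to the end of the episode (undiscounted)."""
    totals, running = [], 0.0
    for r in reversed(rewards):
        running += r
        totals.append(running)
    return totals[::-1]


print(supervised_feedback(["spam", "ham"], ["spam", "spam"]))  # prints [True, False]

rewards = maze_feedback(["up", "right", "right"], solved=True)
print(rewards)                 # prints [0.0, 0.0, 1.0]
print(future_reward(rewards))  # prints [1.0, 1.0, 1.0]
```

The first two maze steps receive a reward of 0 when they happen. Only after the episode ends can the agent see that they led to the goal.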

6. On Unusual or Unexpected Strategies of RL

- The goal of AlphaGo is to win at Go, and the goal of a video game agent is to get a high score / stay alive as long as possible

- What is the goal of an animal/human?

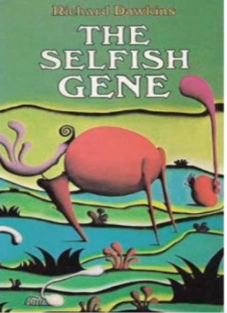

- Evolutionary psychologists believe in the “selfish gene” theory

  - Richard Dawkins, The Selfish Gene

  - Genes simply “want” to make more of themselves

- We humans (conscious living beings) are totally unaware of this

  - We can’t ask our genes how they feel

  - We are simply a vessel for our genes’ proliferation

  - Is consciousness just an illusion?

- There is a disconnect between what we think we want and our “true goal”

  - Like AlphaGo, we’ve found roundabout and unlikely ways of achieving our goal

  - The action taken doesn’t necessarily have to have an obvious / explicit relationship to the goal

  - We might desire riches/money, but why? Maybe natural selection favored it, or it leads to better health and social status. There are no laws of physics that connect riches to gene replication

  - It’s a novel solution to the problem

- AI can also find such strange or unusual ways to achieve a goal

- We can replace “getting rich” with any trait we want

  - Being healthy and strong

  - Having strong analytical skills

  - Explaining why these traits help is a sociologist’s job

- Our interest lies in the fact that there are multiple novel strategies for achieving the same goal (gene replication)

- What is considered a good strategy can fluctuate

  - Example: sugar

    - Our brain runs on sugar; it gives us energy

    - Today, it causes disease and death

  - Thus, a strategy that seems good right now may not be globally optimal

7. Speed of Learning and Adaptation

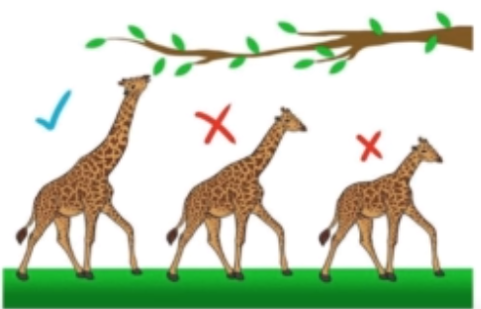

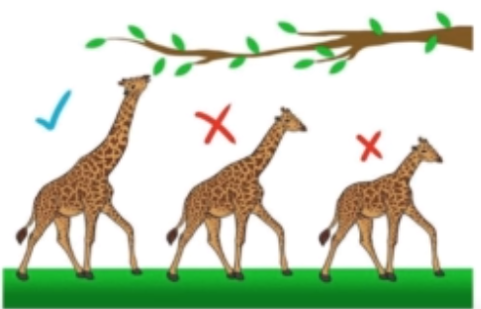

- Animals gain new traits via evolution/mutation/natural selection

  - This is slow

  - Each newborn, even given an advantageous trait, must still learn from scratch

- AI can train via simulation

  - It can spawn new offspring instantly

  - It can obtain hundreds / thousands of years of experience in the blink of an eye
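As a rough illustration of “spawning offspring instantly”, a software agent's learned state can simply be copied. The `Agent` class and its `knowledge` table below are hypothetical; the only library call used is the standard `copy.deepcopy`.

```python
import copy


class Agent:
    """Hypothetical agent whose experience is a state -> action table."""

    def __init__(self):
        self.knowledge = {}

    def learn(self, state, action):
        self.knowledge[state] = action


parent = Agent()
parent.learn("wall ahead", "turn left")

# Each copy starts with everything the parent has learned so far.
offspring = [copy.deepcopy(parent) for _ in range(3)]

# A copy keeps learning on its own without changing the parent.
offspring[0].learn("pit ahead", "jump")

print(offspring[1].knowledge)  # prints {'wall ahead': 'turn left'}
print(parent.knowledge)        # prints {'wall ahead': 'turn left'}
```

An animal newborn has no equivalent of this copy step, which is why it has to learn from scratch.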

Reference:

Artificial Intelligence Reinforcement Learning
