16. Deep Learning Overview

02 Oct 2019 | Reinforcement Learning

Deep Learning

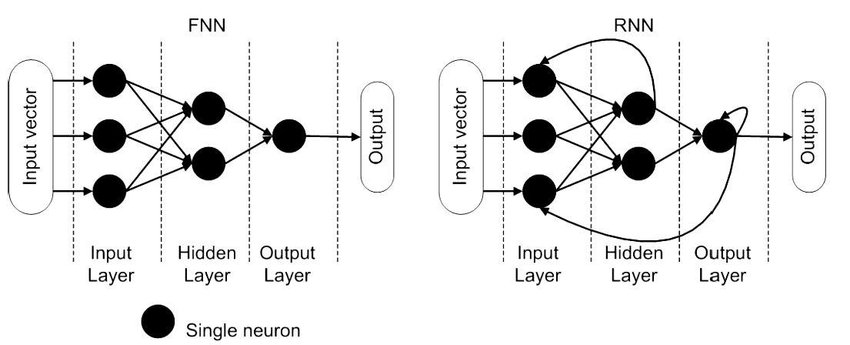

- "Deep learning" is essentially another name for neural networks, usually ones with many layers

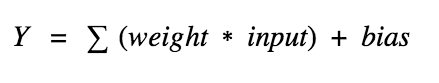

- in its simplest form, it’s just a bunch of logistic regressions stacked together

- layers in between input and output are called hidden layers

- we call this network a “feedforward neural network”

- Nonlinear activation functions (f) make it a nonlinear function approximator

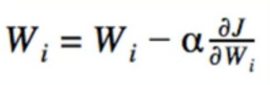
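To make the "stacked logistic regressions" picture concrete, here is a minimal NumPy sketch of a forward pass through a feedforward network. The layer sizes, the random weights, and the choice of sigmoid for the activation f are assumptions made only for illustration; they are not taken from the course material.

```python
import numpy as np

def sigmoid(z):
    # Logistic function, used here as the nonlinear activation f.
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, layers):
    """Pass a batch x through a list of (W, b) layers.

    Each layer is a logistic-regression-style unit bank:
    a linear map followed by a sigmoid.
    """
    a = x
    for W, b in layers:
        a = sigmoid(a @ W + b)
    return a

# Hypothetical sizes: 4 inputs -> 8 hidden -> 8 hidden -> 1 output.
rng = np.random.default_rng(0)
sizes = [4, 8, 8, 1]
layers = [
    (rng.normal(scale=0.5, size=(n_in, n_out)), np.zeros(n_out))
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

x = rng.normal(size=(5, 4))   # batch of 5 feature vectors
y_hat = forward(x, layers)    # one probability-like output per sample
print(y_hat.shape)
```

Removing the sigmoid calls would collapse the whole stack into a single linear map, which is why the nonlinear activation is what makes the network a nonlinear function approximator.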

Training

- Despite the added complexity, training hasn’t changed since logistic regression: we still just do gradient descent

- Problem: training is not as robust with deep networks, because it is sensitive to hyperparameters:

- learning rate, # hidden units, # hidden layers, activation function, optimizer (AdaGrad, RMSprop, Adam, etc.), and the optimizers have their own hyperparameters

- we won’t know what works until we try

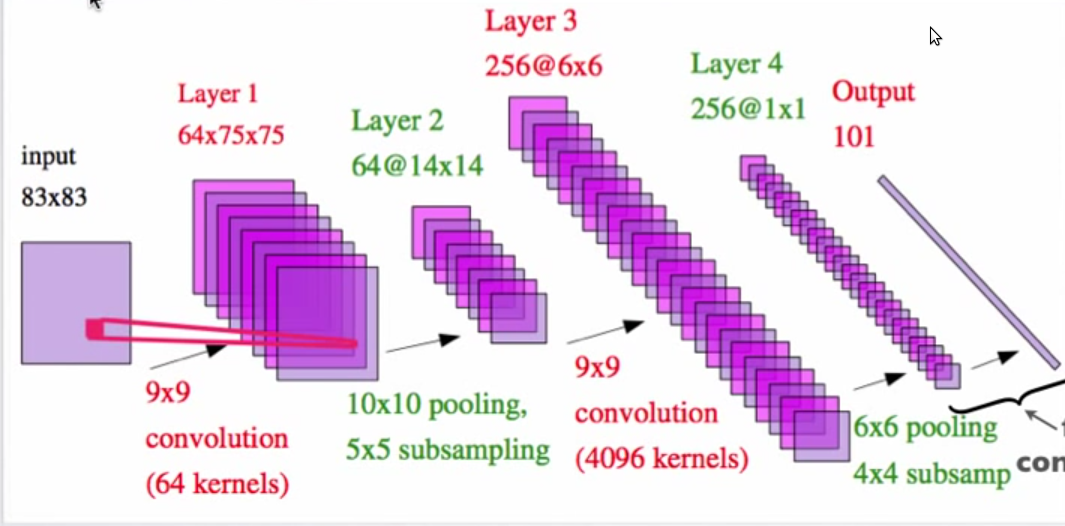

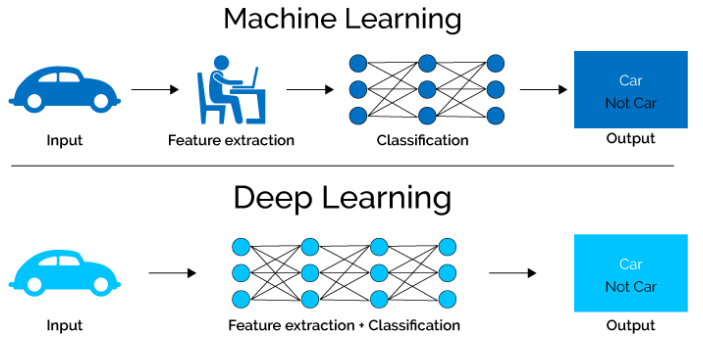
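As a rough illustration of "we still just do gradient descent", the sketch below trains plain logistic regression on a toy AND dataset with two different learning rates. The dataset, the step count, and the two learning rates are assumptions chosen for the example; a deep network would use the same loop, with backpropagation supplying the gradient.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_loss(w, X, y):
    # Mean binary cross-entropy; eps guards against log(0).
    p = sigmoid(X @ w)
    eps = 1e-12
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

def train(X, y, learning_rate, n_steps):
    """Full-batch gradient descent on the logistic-regression loss."""
    w = np.zeros(X.shape[1])
    for _ in range(n_steps):
        p = sigmoid(X @ w)
        grad = X.T @ (p - y) / len(y)   # gradient of the mean log loss
        w -= learning_rate * grad       # the gradient descent update
    return w

# Toy AND problem; the first column is a constant bias feature.
X = np.array([[1, 0, 0], [1, 0, 1], [1, 1, 0], [1, 1, 1]], dtype=float)
y = np.array([0, 0, 0, 1], dtype=float)

initial_loss = log_loss(np.zeros(3), X, y)
results = {}
for lr in (0.01, 0.5):
    w = train(X, y, learning_rate=lr, n_steps=500)
    results[lr] = log_loss(w, X, y)
    print(f"learning rate {lr}: final loss {results[lr]:.4f}")
```

Even on this tiny convex problem the final loss after the same number of steps depends on the learning rate; with deep networks the effect is stronger and interacts with the other hyperparameters listed above.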

Feature Engineering

- As with all ML models, input is a feature vector x

- Neural networks are nice because they save us from having to do lots of manual feature engineering

- Thanks to their nonlinearity, NNs have been shown to learn features automatically and hierarchically from layer to layer

- Example (a network trained on faces):

- layer 1 : edges

- layer 2 : groups of edges

- layer 3 : eye, nose, lips, ears

- layer 4 : entire faces

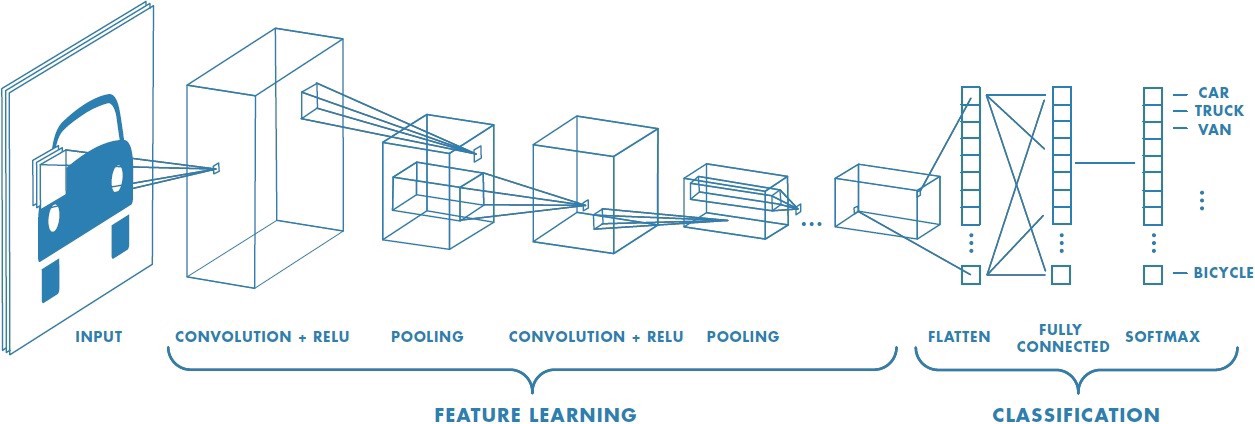

Working with images

- for humans, images are one of our main sensory inputs

- for a robot navigating the real world, images are also one of the main sensory inputs

- images also make up states in video games

- thus we’ll need to know how to work with images to play Atari environments in OpenAI Gym

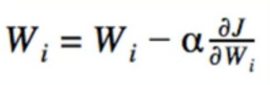

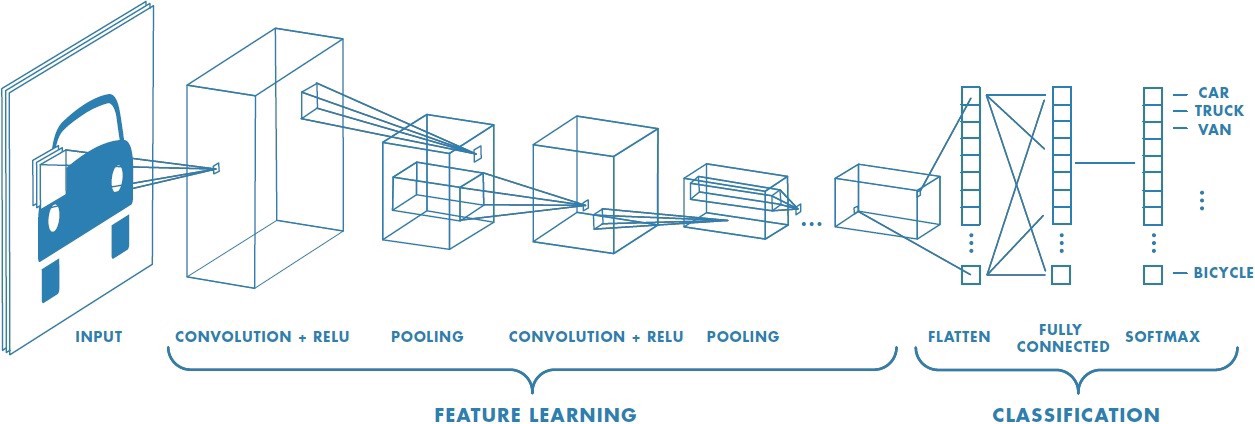

- Luckily we have a tool for this: the convolutional neural network (CNN)

- A CNN does 2-D convolutions before the fully-connected layers

- (In the plain feedforward network described earlier, all layers are fully-connected)

- Convolutional layers have filters which are much smaller than the image

- Also, instead of working with 1-D feature vectors, we work with the 2-D image directly (3-D if color)

- idea: slide the kernel/filter across the image and, at each position, multiply it element-wise with the patch underneath and sum the result to get one output value

- Aside from the sliding, it works exactly like a fully-connected layer

- Multiplication and nonlinear activation

- Concept of shared weights

- smaller # of parameters, takes up less space and trains faster

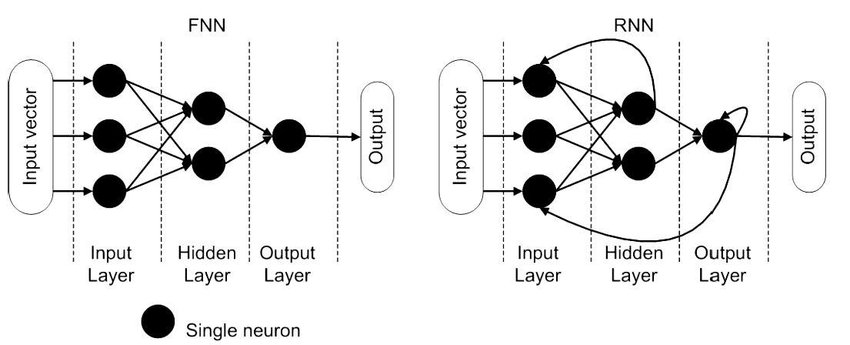
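The sliding-filter idea can be sketched in a few lines of NumPy. This is a minimal, loop-based version for a single grayscale image and a single filter, with no padding or stride; the 6x6 image and the 3x3 vertical-edge kernel are made-up values for illustration. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1)."""
    H, W = image.shape
    kh, kw = kernel.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = image[i:i + kh, j:j + kw]
            # Same weights at every position: multiply and sum.
            out[i, j] = np.sum(patch * kernel)
    return out

def relu(z):
    return np.maximum(z, 0.0)

# Hypothetical 6x6 image: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hypothetical 3x3 filter that responds to dark-to-bright vertical edges.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

feature_map = relu(conv2d(image, kernel))   # multiplication + nonlinearity
print(feature_map)

# Shared weights: the filter has 9 parameters, while a fully-connected
# layer mapping all 36 pixels to the same 16 outputs would need 36 * 16.
conv_params = kernel.size
dense_params = image.size * feature_map.size
print(conv_params, dense_params)
```

The feature map is nonzero only in the columns where the filter straddles the edge, and the parameter comparison at the end shows why shared weights take up less space.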

Working with sequences

- In RL, not only are we interested in images, but sequences too

- Main tool: recurrent neural networks

- An episode is made up of a sequence of states, actions, and rewards

- Any network in which a node loops back to an earlier node is recurrent

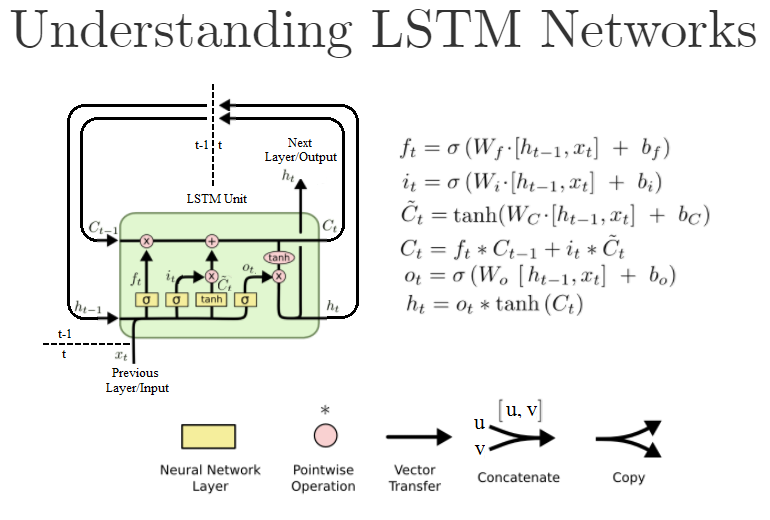

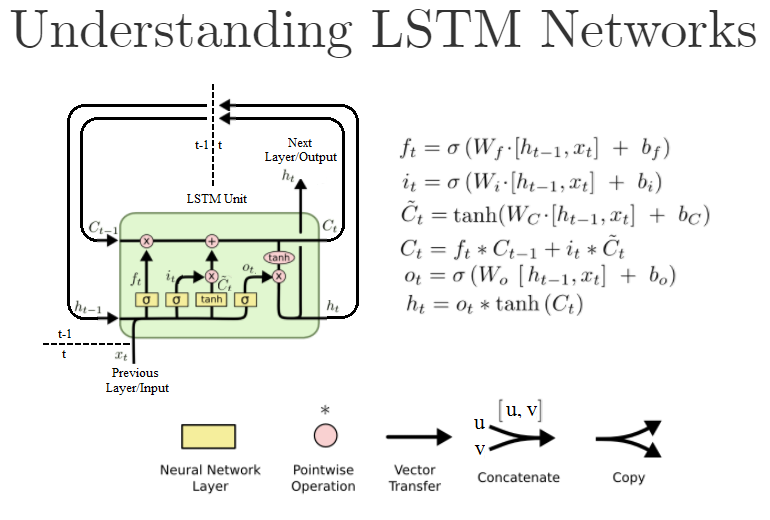

- Typically we don’t think of individual recurrent connections, but of recurrent layers or units, e.g. LSTM or GRU

- we think of them as black boxes: input -> box -> output

- since the output depends on previous inputs, the black box has “memory”

- useful because we can make decisions based on previous frames/states
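To see what "memory" means here, the sketch below runs a simple recurrent layer (an Elman-style unit, which is much simpler than an LSTM or GRU) over two short sequences that end with the same input. The weight values and sequences are hand-picked assumptions for illustration only.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a simple recurrent layer over a sequence; return the last hidden state."""
    h = np.zeros(W_hh.shape[0])   # the memory starts out empty
    for x_t in inputs:
        # The new state depends on the current input AND the previous state.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
    return h

# Hypothetical weights: 1 input feature, 2 hidden units.
W_xh = np.array([[1.0], [-0.5]])
W_hh = np.array([[0.5, 0.0], [0.0, 0.5]])
b_h = np.zeros(2)

# Two sequences with the same final input but different histories.
seq_a = [np.array([1.0]), np.array([0.0])]
seq_b = [np.array([0.0]), np.array([0.0])]

h_a = rnn_forward(seq_a, W_xh, W_hh, b_h)
h_b = rnn_forward(seq_b, W_xh, W_hh, b_h)
print(h_a, h_b)
```

Both sequences end with the same input, yet the final hidden states differ, because the recurrent connection carries information forward from the earlier step.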

Classification vs Regression

- so far we have mostly focused on multi-class classification

- softmax on multiple output nodes + cross entropy

- in RL we want to predict a real-valued scalar (the value function)

- one output node + squared error
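The two output setups can be compared side by side in a short sketch. The logits, the target class, and the value prediction and target below are made-up numbers, used only to show the shape of each computation.

```python
import numpy as np

def softmax(logits):
    # Subtract the max for numerical stability; the result is unchanged.
    e = np.exp(logits - np.max(logits))
    return e / np.sum(e)

def cross_entropy(probs, target_class):
    # Negative log-probability assigned to the correct class.
    return -np.log(probs[target_class])

def squared_error(prediction, target):
    return (prediction - target) ** 2

# Classification: several output nodes -> softmax -> cross entropy.
logits = np.array([2.0, 1.0, 0.1])   # hypothetical network outputs
probs = softmax(logits)
ce = cross_entropy(probs, target_class=0)

# Regression (e.g. a value function): one output node -> squared error.
value_prediction = 1.5               # hypothetical network output
value_target = 2.0                   # hypothetical target value
se = squared_error(value_prediction, value_target)

print(probs, ce, se)
```

Only the output layer and the loss differ between the two cases; the hidden layers and the gradient descent training loop stay the same.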

Reference:

Artificial Intelligence Reinforcement Learning
