15. CPU and Cache Memory

19 May 2020 | CS

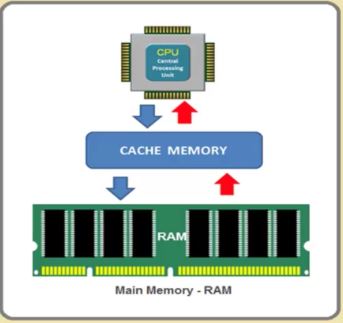

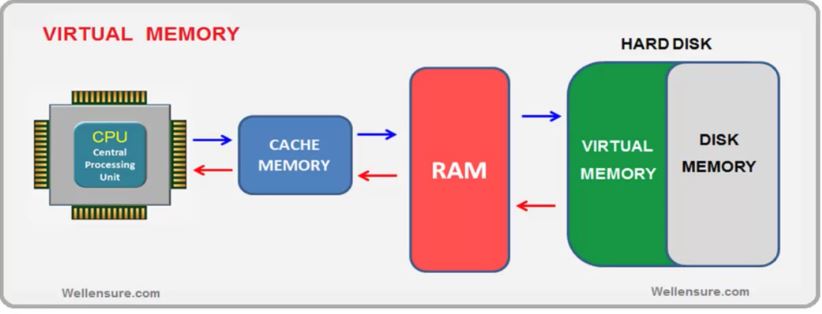

Cache Memory

- In the early decades of computing, main memory (RAM) was extremely slow and incredibly expensive, but CPUs were also slow, so the speed gap between the two was small.

- Starting in the 1980s, the speed gap between CPUs and main memory began to widen very quickly: microprocessor clock speeds took off, but memory access times improved far less dramatically.

- As this gap grew, it became increasingly clear that a new type of fast memory was needed to bridge it.

- Almost all modern CPUs make use of cache memory, which significantly improves the CPU’s performance. Cache memory (caching) was invented to bridge the performance gap between the CPU and main memory.

- The primary goal of the cache memory system is to ensure that the next set of data and instructions the CPU will need is already loaded into cache memory by the time the CPU goes looking for it. Finding it there is called a cache hit.

- During program execution, the CPU first searches the L1 cache for the next instruction and data to be executed. If it fails to find them there, it looks in the L2 cache, then in the L3 cache, and finally in main memory, until the required data and instructions are fetched for execution.

- Therefore, from a CPU performance point of view, a higher hit rate for the L1 cache is better, because L1 is the first and fastest level searched.

- The CPU is amazingly small considering the immense amount of circuitry it contains. The circuits of a CPU are made of logic gates. The gates, in turn, are made of tiny components called transistors, and a modern CPU has many millions (in current chips, billions) of these transistors in its circuitry.

- The CPU is composed of the following components: the memory unit (L1 cache and registers), buses, the arithmetic logic unit (ALU), and the control unit (CU).

- A heatsink with a cooling fan is installed on top of the processor chip to protect the CPU from the excess heat it generates.

- Digital circuits depend heavily on transistors. Transistors act as electronic switches, which is what allows digital systems to build logic gates and perform logical functions.
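The L1, L2, L3, main memory search order described in this section can be sketched in a few lines of Python. This is only an illustrative model, not how any particular CPU is implemented: the latency numbers, the `lookup` and `l1_hit_rate` helpers, and the fixed cache contents are all assumptions made for the example, and a real cache would also load data into the faster levels after a miss.

```python
# Hypothetical latencies in CPU cycles; real values vary by processor.
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40)]
MAIN_MEMORY_LATENCY = 200


def lookup(address, caches):
    """Search L1, then L2, then L3, then main memory.

    `caches` maps a level name to the set of addresses it currently holds.
    Returns (where the data was found, total cycles spent searching).
    """
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency
        if address in caches[name]:
            return name, cycles  # cache hit at this level
    # Missed every cache level, so the data comes from main memory.
    return "RAM", cycles + MAIN_MEMORY_LATENCY


def l1_hit_rate(addresses, caches):
    """Fraction of accesses that are satisfied by the L1 cache."""
    hits = sum(1 for a in addresses if lookup(a, caches)[0] == "L1")
    return hits / len(addresses)


# Fixed, made-up cache contents for the demonstration.
caches = {"L1": {0x10}, "L2": {0x10, 0x20}, "L3": {0x10, 0x20, 0x30}}
for addr in (0x10, 0x20, 0x30, 0x40):
    print(hex(addr), lookup(addr, caches))
```

Under these assumed latencies, an access that hits in L1 costs a few cycles, while one that falls through to main memory costs the sum of every level searched along the way. That difference is why a higher L1 hit rate matters so much.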

CPU internal components

- The CPU is primarily responsible for all the calculations, decision making, and control of the other parts of the computer system.

- The CPU is a single microprocessor chip that performs all these functions using three main units:

- ALU: arithmetic logic unit

- CU: control unit

- Memory unit

CU

- The control unit (CU) is the component of a computer’s central processing unit (CPU) that directs the operations of the processor.

- The control unit tells the computer’s memory, arithmetic logic unit, and input and output devices how to respond to the program’s instructions during execution.

- The control unit directs the operation of the other units by providing timing (clock) and control signals.

- The control unit is typically an internal part of the CPU, and its overall role and operation have remained unchanged since its introduction.

- Most computer resources are managed by the control unit. It also directs the flow of data between the CPU and the other devices.

- The control unit coordinates and controls all the components of a computer system. Within the CPU, it initiates program execution by fetching program instructions from main memory (RAM) into the CPU’s internal registers.


- The control unit (CU) controls, coordinates, and synchronizes the activities and operations performed by the components of the computer system.

- The CU acts as the nerve centre (control centre) of the entire computer system.

- The CU controls system operations by routing the selected data items to the selected processing hardware at the right time.

- The decode section within the control unit decodes the binary program instructions according to the CPU’s instruction set architecture.

- Instruction pipelining is a technique used to increase the CPU’s instruction throughput. The basic idea is to split the processing of each instruction into a series of small independent stages (Fetch, Decode, Execute, Store). Each stage performs one part of the instruction cycle.

- In simpler CPUs, the instruction cycle is executed sequentially: each instruction is fully processed before the next one starts. In most modern CPUs, instruction cycles are instead executed concurrently, and often in parallel, through an instruction pipeline: processing of the next instruction starts before the previous instruction has finished, which improves CPU performance.
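The difference between sequential and pipelined execution can be illustrated with a small cycle count. The sketch below uses the four stages named above and assumes, for simplicity, that every stage takes exactly one clock cycle and that the pipeline never stalls; real pipelines have more stages and do stall. The function names are made up for this example.

```python
STAGES = ["Fetch", "Decode", "Execute", "Store"]


def sequential_cycles(n_instructions):
    """Each instruction finishes all stages before the next one starts."""
    return n_instructions * len(STAGES)


def pipelined_cycles(n_instructions):
    """A new instruction enters the pipeline every cycle (no stalls assumed)."""
    if n_instructions == 0:
        return 0
    return len(STAGES) + (n_instructions - 1)


def pipeline_timeline(n_instructions):
    """For each cycle, map every in-flight instruction to its current stage."""
    timeline = []
    for cycle in range(pipelined_cycles(n_instructions)):
        active = {}
        for instr in range(n_instructions):
            stage = cycle - instr  # instruction i enters the pipeline at cycle i
            if 0 <= stage < len(STAGES):
                active[f"I{instr + 1}"] = STAGES[stage]
        timeline.append(active)
    return timeline


for cycle, active in enumerate(pipeline_timeline(3), start=1):
    print(cycle, active)
print(sequential_cycles(3), "cycles sequential vs", pipelined_cycles(3), "pipelined")
```

With three instructions, the sequential model needs 12 cycles while the idealized pipeline needs 6, because from the second cycle onward several instructions occupy different stages at the same time.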

ALU

- The ALU is a complex digital circuit and one of many components within a computer’s CPU.

- The ALU performs both bitwise and mathematical operations on binary numbers and is the last component to perform calculations in the processor. The ALU takes two operands and an opcode that tells it which operation to perform on the input data. After the ALU has processed the data, the result is written to a register, from where it can be sent to main memory (RAM).

- ALU stands for arithmetic logic unit. It is a digital circuit built into the CPU that performs the arithmetic and logical operations.

- The ALU is a fundamental building block of the CPU and an integral part of almost all microprocessors.

- Modern processors are multi-core and can contain a number of very powerful and complex ALUs within a single processor chip.

- The ALU drives the processing power of all modern CPUs and GPUs (graphics processing units). Each CPU or GPU can contain one or more very powerful and complex ALUs performing millions of arithmetic and logical operations per second.

- In some processors, the ALU is divided into an arithmetic unit (AU) and a logic unit (LU). The AU performs the arithmetic operations and the LU performs the logical operations.

- Most of the operations of a CPU are performed by one or more ALUs, which load data from input registers. A register is a small amount of high-speed memory storage available as part of a CPU.

- The control unit tells the ALU which operation to perform on that data, and the ALU stores the result in an output register. The control unit moves the data between these registers, the ALU, and main memory (RAM).
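As a rough illustration of an ALU taking two operands and an opcode, here is a minimal Python sketch. The 8-bit word size, the opcode names, and the register names `R0` to `R2` are assumptions chosen for the example and do not correspond to any specific instruction set.

```python
WIDTH = 8                # assumed word size in bits
MASK = (1 << WIDTH) - 1  # 0xFF, keeps results within 8 bits

# Hypothetical opcode names; a real instruction set encodes these in binary.
OPERATIONS = {
    "ADD": lambda a, b: a + b,
    "SUB": lambda a, b: a - b,
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
}


def alu(opcode, a, b):
    """Apply the operation selected by `opcode` to operands `a` and `b`."""
    # Masking makes results wrap around, as in fixed-width hardware.
    return OPERATIONS[opcode](a, b) & MASK


# Standing in for the control unit: choose the opcode, read two input
# registers, and store the ALU's result in an output register.
registers = {"R0": 0b1100, "R1": 0b1010, "R2": 0}
registers["R2"] = alu("AND", registers["R0"], registers["R1"])
print(bin(registers["R2"]))
```

The dictionary lookup plays the part of the opcode selecting a circuit inside the ALU, and the surrounding register reads and writes stand in for the data movement that the control unit directs.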
