25. Custom Exceptions

25 Sep 2019 | C++

Example 1 (Basic)

#include <iostream>

#include <string>

using namespace std;

void mightGoWrong() {

bool error1 = false;

bool error2 = true;

if(error1) {

throw "Something went wrong.";

}

if(error2) {

//throw string("Something else went wrong.");

throw int(4);

}

}

void usesMightGoWrong() {

mightGoWrong();

}

int main() {

try {

usesMightGoWrong();

}

catch(int e) { // the thrown int value is caught and copied into e

cout << "Error code: " << e << endl;

}

catch(char const * e) { // a thrown string literal arrives as a pointer to its array of constant chars

cout << "Error message: " << e << endl;

}

catch(string &e) { // catch by reference: catching by value would copy the string, and that copy could throw a second exception

// C++ does not handle two active exceptions gracefully: it calls std::terminate, which typically ends the program without running destructors

cout << "string error message: " << e << endl;

}

cout << "Still running" << endl;

return 0;

}

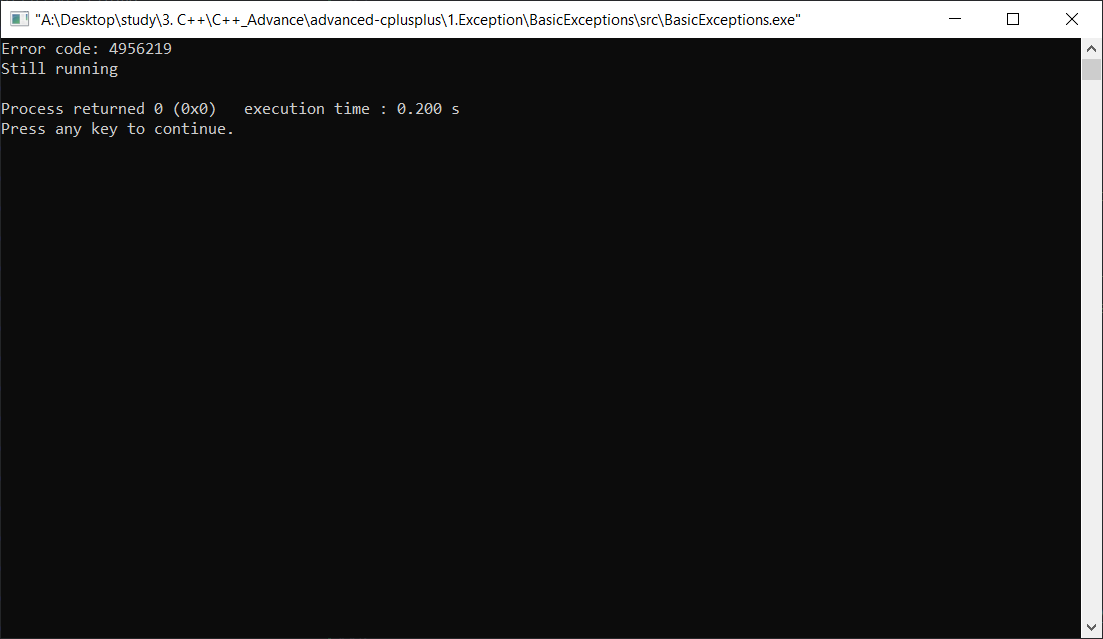

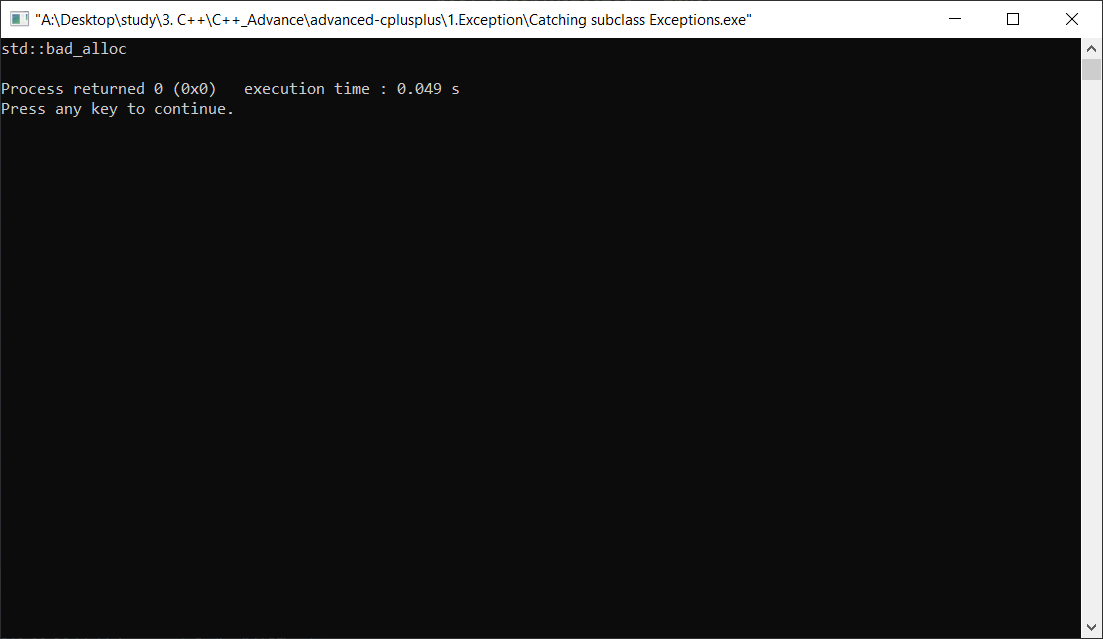

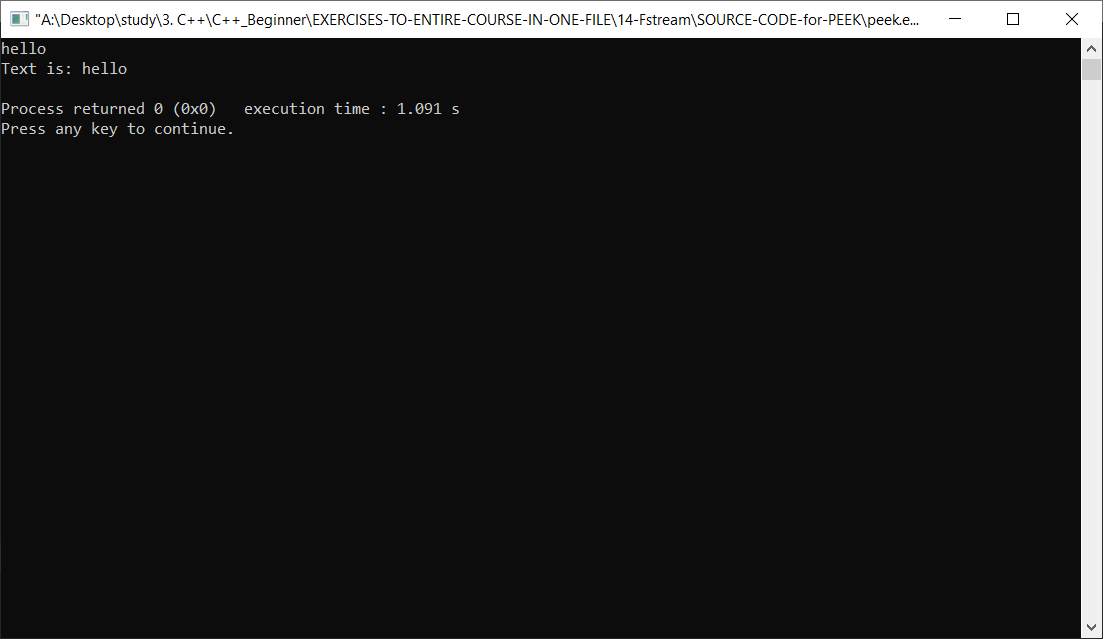

Result
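Which handler runs depends only on the type of the thrown value. The sketch below is an illustration rather than part of the original example: `caughtBy` and its `kind` parameter are hypothetical names. It shows that a string literal is caught by the `char const *` handler and not by the `string &` handler.

```cpp
#include <string>

// Hypothetical helper: throws a different type depending on `kind`
// and returns the name of the handler that caught it.
std::string caughtBy(int kind) {
    try {
        if (kind == 0) throw 4;                 // an int
        if (kind == 1) throw "literal";         // a char const *
        throw std::string("string object");     // a std::string
    } catch (int) {
        return "int";
    } catch (char const*) {
        return "char const *";
    } catch (std::string&) {
        return "string";
    }
    return "none"; // not reached: every path above throws and is caught
}
```

Calling `caughtBy(1)` returns "char const *", because no conversion from a literal to `std::string` is attempted when handlers are matched.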

Example 2 (Custom Exception)

#include <iostream>

#include <exception>

using namespace std;

class MyException: public exception {

// public inheritance: MyException derives from the standard exception class

public:

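virtual const char* what() const noexcept {

// noexcept (written throw() before C++11) promises that what() will not throw an exception.

// The promise lets the compiler optimize calls to it.

// If what() throws anyway, the program is terminated at run time.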

return "Something bad happened!";

}

};

class Test {

public:

void goesWrong() {

throw MyException();

}

};

int main() {

Test test;

try {

test.goesWrong();

}

catch(MyException &e) {

cout << e.what() << endl;

}

return 0;

}

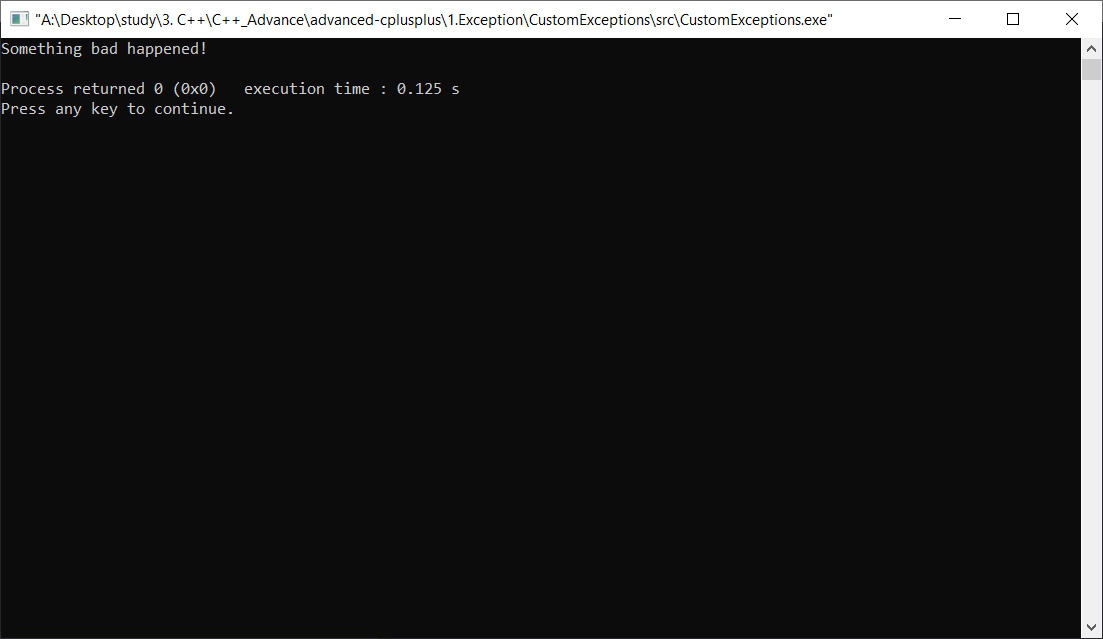

Result

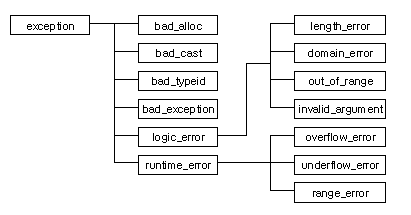
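A custom exception often needs to carry a message chosen at the throw site. One possible approach, sketched here under assumptions, is to derive from `std::runtime_error`, which stores the message and implements `what()` for you. The names `FileError`, `openFile`, and `describeFailure` are hypothetical and do not appear in the original example.

```cpp
#include <stdexcept>
#include <string>

// Hypothetical custom exception: std::runtime_error keeps the message,
// so there is no need to override what() by hand.
class FileError : public std::runtime_error {
public:
    explicit FileError(const std::string& fileName)
        : std::runtime_error("Could not open file: " + fileName) {}
};

// Hypothetical helper that always fails, just to demonstrate the throw.
void openFile(const std::string& fileName) {
    throw FileError(fileName);
}

// Calls openFile and returns the message carried by the exception.
std::string describeFailure(const std::string& fileName) {
    try {
        openFile(fileName);
    } catch (const FileError& e) {
        return e.what();
    }
    return "no error";
}
```

Because `std::runtime_error` itself derives from `std::exception`, a `FileError` can also be caught by a handler for `std::exception`.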

Example 3 (Standard Exception)

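- bad_alloc is declared in <new> and inherits from std::exception, which is declared in <exception>. <iostream> often pulls both in indirectly, but that is not guaranteed.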

#include <iostream>

#include <new>

using namespace std;

class CanGoWrong {

public:

CanGoWrong() {

char *pMemory = new char[999999999999999]; // request far more memory than a typical 64-bit system can provide, so that new throws bad_alloc

// the number in brackets is the number of chars (bytes) to allocate

delete[] pMemory;

}

};

int main() {

try {

CanGoWrong wrong;

}

catch(bad_alloc &e) {

// bad_alloc is the exception type thrown when the allocation fails

// bad_alloc is a class name; standard exceptions use lower-case names, so pick a naming convention and stick to it

cout << "Caught exception: " << e.what() << endl;

}

cout << "Still running" << endl;

return 0;

}
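If an exception is not wanted for a failed allocation, the standard library also offers a non-throwing form of `new`. The sketch below is an alternative to the approach in Example 3, not a replacement for it; `tryAllocate` is a hypothetical helper name.

```cpp
#include <cstddef>
#include <memory>
#include <new>

// Hypothetical helper: asks for `count` chars with the non-throwing
// form of new. On failure the returned pointer is empty (nullptr)
// instead of a bad_alloc being thrown.
std::unique_ptr<char[]> tryAllocate(std::size_t count) {
    return std::unique_ptr<char[]>(new (std::nothrow) char[count]);
}
```

A caller would test the result, for example with `if (!buffer)`, before using it. The `unique_ptr` releases the memory with `delete[]` automatically, so no manual cleanup is needed.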

Example 4 (Catching Subclass Exceptions)

#include <iostream>

#include <exception>

#include <new>

using namespace std;

void goeswrong (){

bool error1Detected = true;

bool error2Detected = false;

if(error1Detected){

throw bad_alloc();

}

if(error2Detected){

throw exception();

}

}

int main(){

try{

goeswrong();

}

catch(exception &e){

cout<<e.what()<<endl;

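// e refers to the object that was actually thrown, here a bad_alloc.

// bad_alloc derives from std::exception, so this handler accepts it, and the virtual what() still reports the bad_alloc message.

}

catch(bad_alloc &e){ // never reached: the exception& handler above already catches bad_alloc, so handlers for subclasses must come first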

cout<<e.what()<<endl;

}

return 0;

}
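Handlers are tried from top to bottom, and a handler for a base class also matches its subclasses. A minimal sketch of the usual ordering, with the subclass handler first, could look like this; `whichHandler` and its parameter are hypothetical names used only for illustration.

```cpp
#include <exception>
#include <new>
#include <string>

// Hypothetical helper: throws either bad_alloc or a plain exception
// and returns the name of the handler that caught it.
std::string whichHandler(bool throwBadAlloc) {
    try {
        if (throwBadAlloc) {
            throw std::bad_alloc();
        }
        throw std::exception();
    } catch (const std::bad_alloc&) {   // subclass handler first
        return "bad_alloc handler";
    } catch (const std::exception&) {   // base-class handler last
        return "exception handler";
    }
    return "none"; // not reached: every path above throws and is caught
}
```

With this ordering, `whichHandler(true)` reaches the bad_alloc handler, while any other standard exception still falls through to the general one.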

Example 1 (Basic)

#include <iostream>

#include <string>

using namespace std;

void mightGoWrong() {

bool error1 = false;

bool error2 = true;

if(error1) {

throw "Something went wrong.";

}

if(error2) {

//throw string("Something else went wrong.");

throw int ("4");

}

}

void usesMightGoWrong() {

mightGoWrong();

}

int main() {

try {

usesMightGoWrong();

}

catch(int e) { // the throwing the value catched and apply to "int e"

cout << "Error code: " << e << endl;

}

catch(char const * e) { // character, so it save one by one alpabet in one matrix place

cout << "Error message: " << e << endl;

}

catch(string &e) { // c++ does not gracefully handle situation where there are two exceptions active at the same time in string.

//it imeediatley terminates the program without calling any destructor

cout << "string error message: " << e << endl;

}

cout << "Still running" << endl;

return 0;

}

Result

Example 2(Custom Exception)

#include <iostream>

#include <exception>

using namespace std;

class MyException: public exception {

//public exepction, is that inherit exception class

public:

virtual const char* what() const throw(){

//can not throw the exception.

//it is the optimize the way

//it halt the time.

return "Something bad happened!";

}

};

class Test {

public:

void goesWrong() {

throw MyException();

}

};

int main() {

Test test;

try {

test.goesWrong();

}

catch(MyException &e) {

cout << e.what() << endl;

}

return 0;

}

Result

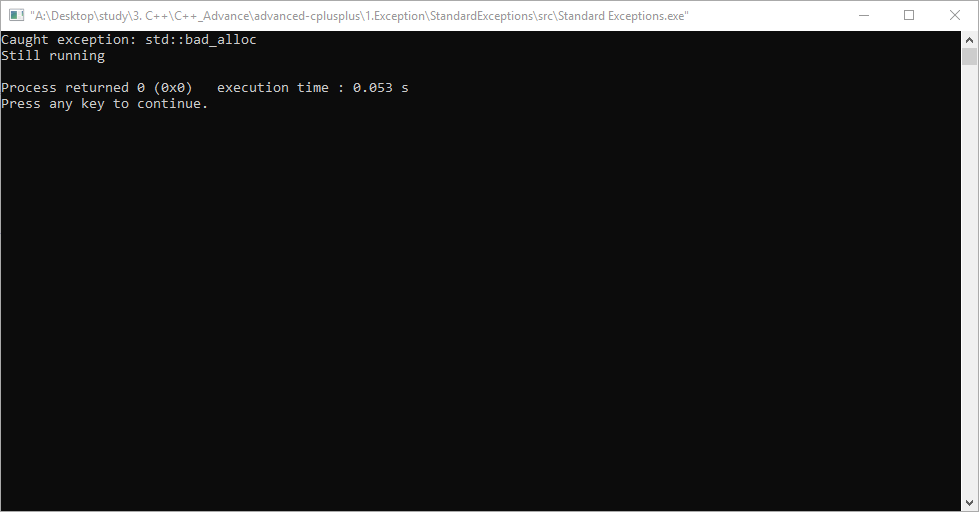

Example 3(standard Exception)

- bad_alloic is hesitate to iostream of exception.h

#include <iostream>

using namespace std;

class CanGoWrong {

public:

CanGoWrong() {

char *pMemory = new char[91231312312312999999999999999]; // this is the memory to handle

// new char[999] number of bite

delete[] pMemory;

}

};

int main() {

try {

CanGoWrong wrong;

}

catch(bad_alloc &e) {

//bad_alloc is the catching up the error

//bad_alloc is name of class is to pick a convetnion and stick to it

cout << "Caught exception: " << e.what() << endl;

}

cout << "Still running" << endl;

return 0;

}

Example 4(Catching subclass Execptions)

#include <iostream>

#include <exception>

using namespace std;

void goeswrong (){

bool error1Detected = true;

bool error2Detected = false;

if(error1Detected){

throw bad_alloc();

}

if(error2Detected){

throw exception();

}

}

int main(){

try{

goeswrong();

}

catch(exception &e){

cout<<e.what()<<endl;

// what is the state of ".e"

// like saying yeah that is std::exception.

}

catch(bad_alloc &e){

cout<<e.what()<<endl;

}

return 0;

}