8. Stack

08 May 2020 | Data structure_1

Introduction

- Now that we know the basic data structures, we can begin using them to build different, more helpful data structures.

Why is it Important?

- Stacks are used for a variety of operations in computer science. They can do anything from helping to navigate through a maze, to helping traverse a graph.

Lesson Notes

-

Stacks are a data structure that are built off the backs of other data structures. They work off a set of important rules.

-

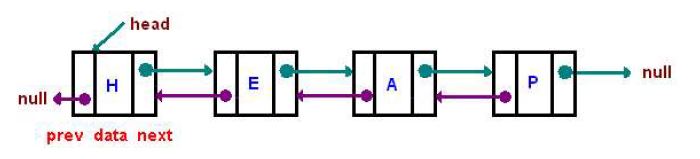

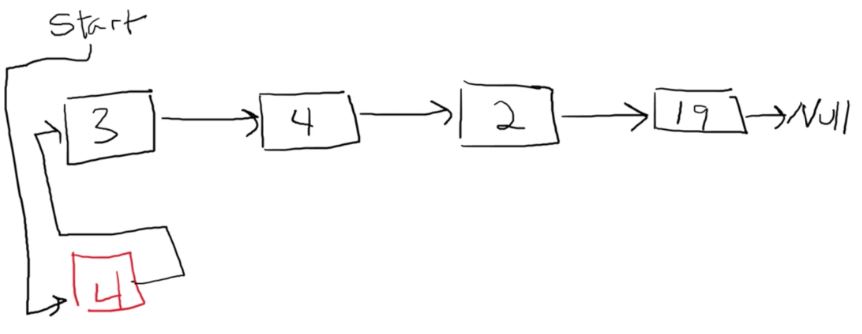

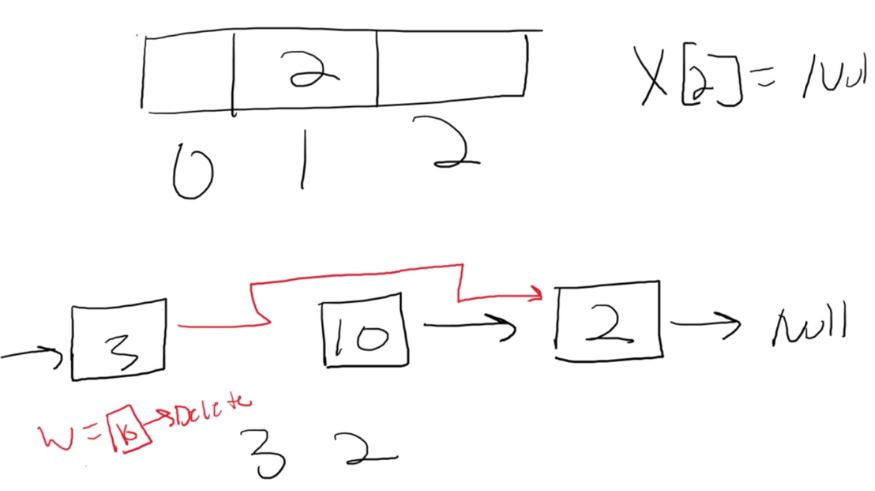

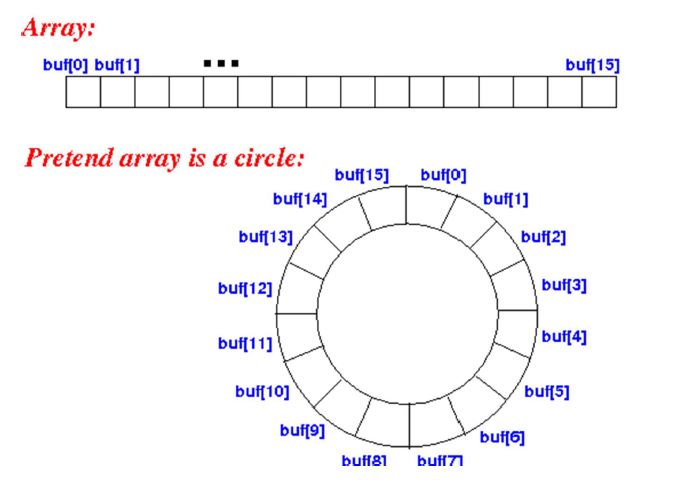

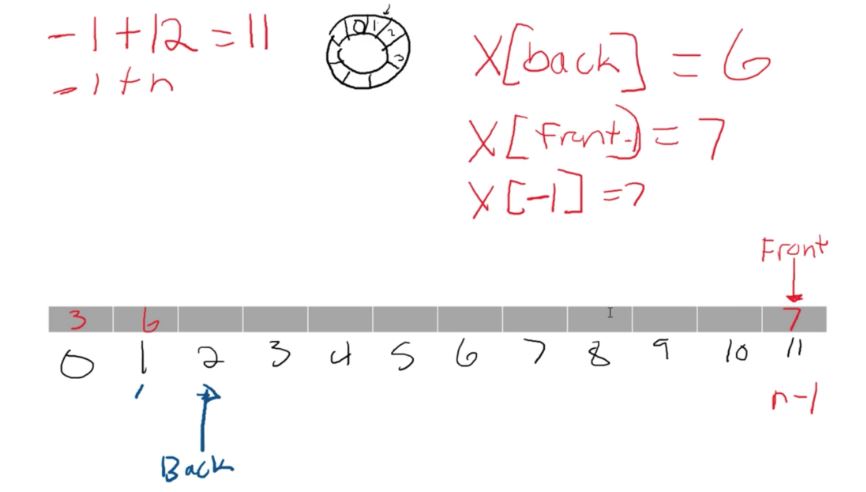

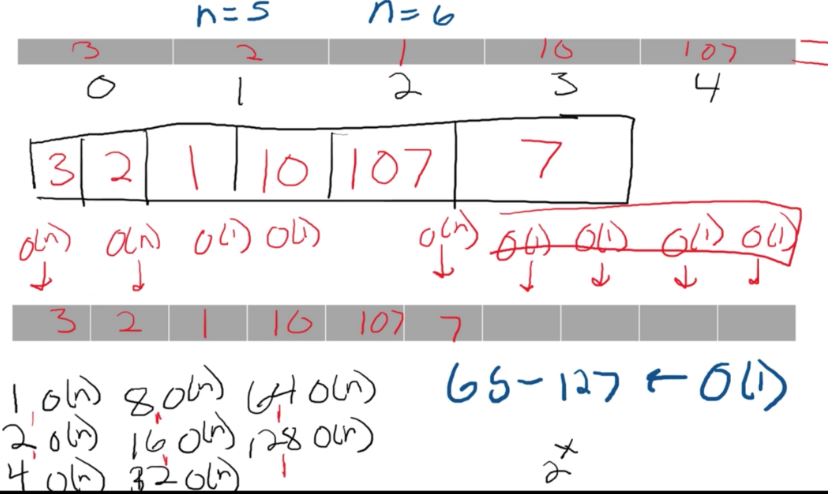

These rules are usually applied to either a linked list or array. It’s like a specialization. Through the rules we are able to create a specialized structure with new pros and cons.

-

A stack works like a stack of trays in the cafeteria. You always grab from the top, not the bottom. This means you will have a relationship where the most recent element that has come, will be the first serviced.

-

Let’s say 100 items come into a stack in order. So 1 is inserted, then 2, then 3, etc. In this case, 100 would be “served” first, then 99, then 98, then 97 and so on. This is helpful for something like an undo system on an application. Every step you make is inserted onto the stack. Then whenever you want to undo, you just “pop” off the top of the stack to go back in time.

-

With this pattern, we now have what is known as a “Last in First Out (LIFO)” relationship. This is also commonly referred to as a “Last Come, First Served(LCFS)” relationship.

-

Going along with the terminology. These are some key terms to know when working with stacks and queues.

-

Pop - To remove an element from a stack. (Can sometimes be used for queues).

-

Push - To insert an element onto a stack. (Can sometimes be used for queues).

-

Enqueue - To insert an element onto a queue

-

Dequeue - To remove an element from a Queue.

-

FIFO - Means “First in First Out” It’s the technical term for a queue-like data structure.

-

LIFO - Means “Last In First Out”. It’s the technical term for a stack-like data structure.

-

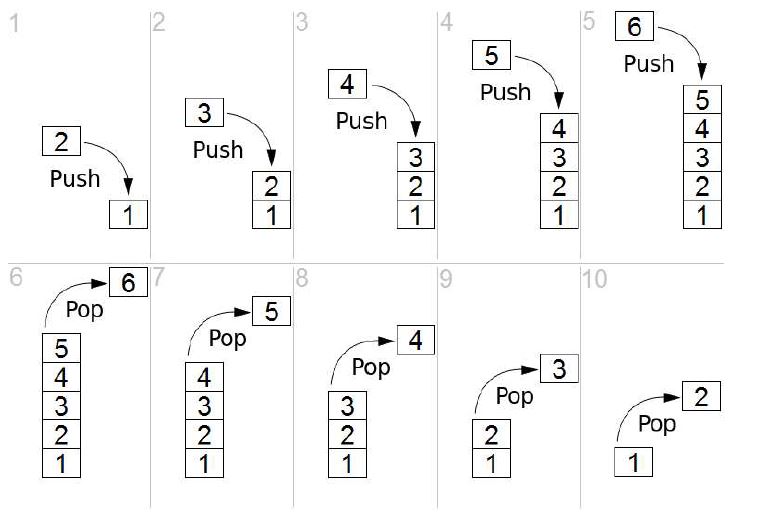

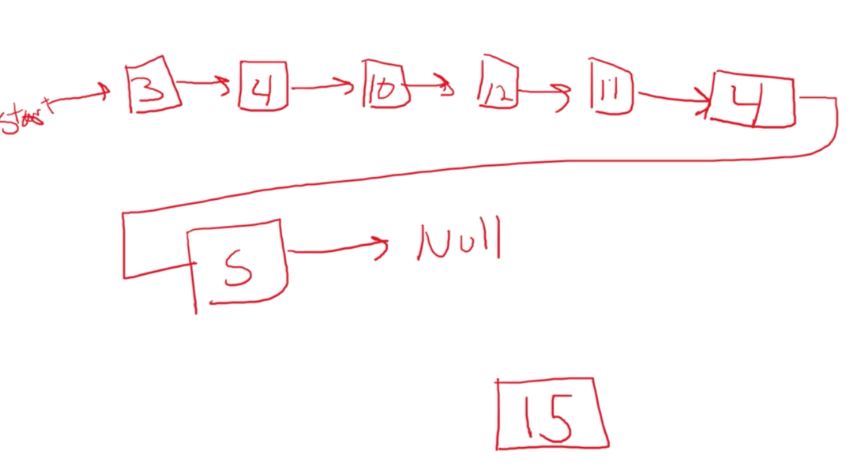

Let’s take a look at how a stack operates through an example:

-

Notice how it looks like we are “stacking” books or trays up. We keep stacking. Then when we want to remove one, we take it off the top. We never remove from anywhere else in a stack!

-

Now, if we kept popping and pushing in a loop, we would get a situation where number 1 could potentially never be touched again. For this reason, these aren’t typically used for scheduling purposes. Imagine if you used a stack to run your computer. Old windows would be frozen indefinitely as new requests keep getting serviced first.

-

In the next set of lessons, we will cover a data structure that is more apt to deal with situations like this.

Introduction

- Now that we know the basic data structures, we can begin using them to build different, more helpful data structures.

Why is it Important?

- Stacks are used for a variety of operations in computer science. They can do anything from helping to navigate through a maze, to helping traverse a graph.

Lesson Notes

-

Stacks are a data structure that are built off the backs of other data structures. They work off a set of important rules.

-

These rules are usually applied to either a linked list or array. It’s like a specialization. Through the rules we are able to create a specialized structure with new pros and cons.

-

A stack works like a stack of trays in the cafeteria. You always grab from the top, not the bottom. This means you will have a relationship where the most recent element that has come, will be the first serviced.

-

Let’s say 100 items come into a stack in order. So 1 is inserted, then 2, then 3, etc. In this case, 100 would be “served” first, then 99, then 98, then 97 and so on. This is helpful for something like an undo system on an application. Every step you make is inserted onto the stack. Then whenever you want to undo, you just “pop” off the top of the stack to go back in time.

-

With this pattern, we now have what is known as a “Last in First Out (LIFO)” relationship. This is also commonly referred to as a “Last Come, First Served(LCFS)” relationship.

-

Going along with the terminology. These are some key terms to know when working with stacks and queues.

-

Pop - To remove an element from a stack. (Can sometimes be used for queues).

-

Push - To insert an element onto a stack. (Can sometimes be used for queues).

-

Enqueue - To insert an element onto a queue

-

Dequeue - To remove an element from a Queue.

-

FIFO - Means “First in First Out” It’s the technical term for a queue-like data structure.

-

LIFO - Means “Last In First Out”. It’s the technical term for a stack-like data structure.

-

Let’s take a look at how a stack operates through an example:

-

Notice how it looks like we are “stacking” books or trays up. We keep stacking. Then when we want to remove one, we take it off the top. We never remove from anywhere else in a stack!

-

Now, if we kept popping and pushing in a loop, we would get a situation where number 1 could potentially never be touched again. For this reason, these aren’t typically used for scheduling purposes. Imagine if you used a stack to run your computer. Old windows would be frozen indefinitely as new requests keep getting serviced first.

-

In the next set of lessons, we will cover a data structure that is more apt to deal with situations like this.